Note: this is a follow-up to episode 1 and episode 3.

Bonjour,

After a few weeks away I came back to continue the work. Unfortunately the packages built for the branch had been removed to save space, and scheduling the job failed:

```
$ teuthology-suite --machine-type smithi --suite rados/standalone/workloads/c2c.yaml --priority 50 --email loic@dachary.org --suite-branch wip-mempool-cacheline-49781 --suite-repo https://lab.fedeproxy.eu/ceph/ceph --ceph wip-mempool-cacheline-49781
2021-04-24 16:01:12,944.944 INFO:teuthology.suite:Using random seed=9061
2021-04-24 16:01:12,945.945 INFO:teuthology.suite.run:kernel sha1: distro
2021-04-24 16:01:13,278.278 INFO:teuthology.suite.run:ceph sha1: b8ab6380adfc028da8166704dbc1755260226375
2021-04-24 16:01:13,278.278 INFO:teuthology.suite.util:container build centos/8, checking for build_complete
2021-04-24 16:01:13,436.436 INFO:teuthology.suite.util:build not complete
2021-04-24 16:01:13,437.437 INFO:teuthology.suite.run:ceph version: None
warning: redirecting to https://lab.fedeproxy.eu/ceph/ceph.git/
warning: redirecting to https://lab.fedeproxy.eu/ceph/ceph.git/
2021-04-24 16:01:14,800.800 INFO:teuthology.suite.run:ceph branch: wip-mempool-cacheline-49781 864e22a77ccac23454407573c125a17c55138eb7
2021-04-24 16:01:14,814.814 INFO:teuthology.repo_utils:Fetching wip-mempool-cacheline-49781 from origin
2021-04-24 16:01:16,025.025 INFO:teuthology.repo_utils:Resetting repo at /home/dachary/src/lab.fedeproxy.eu_ceph_ceph_wip-mempool-cacheline-49781 to origin/wip-mempool-cacheline-49781
2021-04-24 16:01:17,199.199 INFO:teuthology.suite.run:teuthology branch: master 2713a3cd31b17738a50039eaa9d859b5dc39fb8a
2021-04-24 16:01:17,210.210 INFO:teuthology.suite.run:Suite rados:standalone:workloads:c2c.yaml in /home/dachary/src/lab.fedeproxy.eu_ceph_ceph_wip-mempool-cacheline-49781/qa/suites/rados/standalone/workloads/c2c.yaml generated 1 jobs (not yet filtered)
2021-04-24 16:01:17,219.219 INFO:teuthology.suite.util:container build centos/8, checking for build_complete
2021-04-24 16:01:17,365.365 INFO:teuthology.suite.util:build not complete
2021-04-24 16:01:17,365.365 ERROR:teuthology.suite.run:Packages for os_type 'None', flavor basic and ceph hash 'b8ab6380adfc028da8166704dbc1755260226375' not found
Job scheduled with name dachary-2021-04-24_16:01:12-rados:standalone:workloads:c2c.yaml-wip-mempool-cacheline-49781-distro-basic-smithi and ID 6071217
2021-04-24 16:01:19,251.251 INFO:teuthology.suite.run:Scheduling rados:standalone:workloads:c2c.yaml
Traceback (most recent call last):
  File "/home/dachary/venv/bin/teuthology-suite", line 33, in <module>
    sys.exit(load_entry_point('teuthology', 'console_scripts', 'teuthology-suite')())
  File "/home/dachary/teuthology/scripts/suite.py", line 189, in main
    return teuthology.suite.main(args)
  File "/home/dachary/teuthology/teuthology/suite/__init__.py", line 143, in main
    run.prepare_and_schedule()
  File "/home/dachary/teuthology/teuthology/suite/run.py", line 397, in prepare_and_schedule
    num_jobs = self.schedule_suite()
  File "/home/dachary/teuthology/teuthology/suite/run.py", line 644, in schedule_suite
    self.schedule_jobs(jobs_missing_packages, jobs_to_schedule, name)
  File "/home/dachary/teuthology/teuthology/suite/run.py", line 496, in schedule_jobs
    name,
  File "/home/dachary/teuthology/teuthology/suite/util.py", line 76, in schedule_fail
    raise ScheduleFailError(message, name)
teuthology.exceptions.ScheduleFailError: Scheduling dachary-2021-04-24_16:01:12-rados:standalone:workloads:c2c.yaml-wip-mempool-cacheline-49781-distro-basic-smithi failed: At least one job needs packages that don't exist for hash b8ab6380adfc028da8166704dbc1755260226375.
```

I asked Nathan to push the branch again so that the packages are rebuilt. Once they are built, I will be able to schedule the job with the command above. Fortunately, modifying the scripts of this performance test does not require rebuilding the Ceph packages: the scripts can conveniently be pulled from my repository with the options:

```
... --suite-branch wip-mempool-cacheline-49781 --suite-repo https://lab.fedeproxy.eu/ceph/ceph ...
```

While waiting for Nathan to act on my behalf, I tried to grab a physical machine on which to build Ceph and run the test script manually. It failed for an unknown reason, and I asked on irc.oftc.net#ceph-devel whether someone had a similar experience.

```
(venv) dachary@teuthology:~/teuthology$ teuthology-lock --lock-many 1 --machine-type smithi --owner loic@dachary.org
2021-04-24 16:41:08,805.805 INFO:teuthology.lock.ops:Start node 'ubuntu@smithi193.front.sepia.ceph.com' reimaging
2021-04-24 16:41:08,806.806 INFO:teuthology.lock.ops:Updating [ubuntu@smithi193.front.sepia.ceph.com]: reset os type and version on server
2021-04-24 16:41:08,806.806 INFO:teuthology.lock.ops:Updating smithi193.front.sepia.ceph.com on lock server
2021-04-24 16:41:08,818.818 INFO:teuthology.lock.ops:Node 'ubuntu@smithi193.front.sepia.ceph.com' reimaging is complete
2021-04-24 16:41:09,026.026 INFO:teuthology.provision.fog.smithi193:Scheduling deploy of ubuntu 18.04
2021-04-24 16:41:09,482.482 INFO:teuthology.orchestra.console:Power off smithi193
2021-04-24 16:41:17,851.851 INFO:teuthology.orchestra.console:Power off for smithi193 completed
2021-04-24 16:41:17,952.952 INFO:teuthology.orchestra.console:Power on smithi193
2021-04-24 16:41:26,312.312 INFO:teuthology.orchestra.console:Power on for smithi193 completed
2021-04-24 16:41:26,413.413 INFO:teuthology.provision.fog.smithi193:Waiting for deploy to finish
...
2021-04-24 16:55:36,782.782 ERROR:paramiko.transport:paramiko.ssh_exception.SSHException: Error reading SSH protocol banner
teuthology.exceptions.MaxWhileTries: reached maximum tries (100) after waiting for 600 seconds
```
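The `MaxWhileTries` error at the end of this log comes from a bounded polling loop: teuthology kept retrying (presumably waiting for SSH on the freshly reimaged machine to answer, given the banner error just before) and gave up after 100 tries and 600 seconds. teuthology has its own helper for this; the Python sketch below is not that code, only an illustration of the pattern, assuming a fixed 6 second sleep between tries (which is what 100 tries over 600 seconds works out to). The `wait_until` function and its parameters are hypothetical.

```python
import time


class MaxWhileTries(Exception):
    """Raised when the polling loop gives up (name borrowed from the log)."""


def wait_until(check, tries=100, sleep=6.0, sleeper=time.sleep):
    """Hypothetical bounded polling loop, not teuthology's implementation.

    Calls check() up to `tries` times, sleeping `sleep` seconds after each
    failed attempt. Returns the total time waited as soon as check() is
    truthy; otherwise raises MaxWhileTries after tries * sleep seconds.
    """
    waited = 0.0
    for _ in range(tries):
        if check():
            return waited
        sleeper(sleep)  # injectable so the sketch can be tested without sleeping
        waited += sleep
    raise MaxWhileTries(
        f"reached maximum tries ({tries}) after waiting for {waited:g} seconds"
    )
```

For example, `wait_until(lambda: False, sleeper=lambda s: None)` gives up immediately with the same message as in the log above; the no-op `sleeper` only exists so the loop can be exercised without actually waiting ten minutes.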

Fortunately Nathan replied quickly. I released the machine and was able to submit the job:

```
(venv) dachary@teuthology:~/teuthology$ teuthology-lock --unlock --owner loic@dachary.org smithi193.front.sepia.ceph.com
2021-04-24 16:56:57,276.276 INFO:teuthology.lock.ops:unlocked smithi193.front.sepia.ceph.com
(venv) dachary@teuthology:~/teuthology$ teuthology-lock --lock-many 1 --machine-type mira --owner loic@dachary.org^C
(venv) dachary@teuthology:~/teuthology$ teuthology-suite --machine-type smithi --suite rados/standalone/workloads/c2c.yaml --priority 50 --email loic@dachary.org --suite-branch wip-mempool-cacheline-49781 --suite-repo https://lab.fedeproxy.eu/ceph/ceph --ceph wip-mempool-cacheline-49781
2021-04-24 18:20:26,767.767 INFO:teuthology.suite:Using random seed=1777
2021-04-24 18:20:26,768.768 INFO:teuthology.suite.run:kernel sha1: distro
2021-04-24 18:20:26,954.954 INFO:teuthology.suite.run:ceph sha1: 864e22a77ccac23454407573c125a17c55138eb7
2021-04-24 18:20:26,955.955 INFO:teuthology.suite.util:container build centos/8, checking for build_complete
2021-04-24 18:20:27,270.270 INFO:teuthology.suite.run:ceph version: 17.0.0-2602.g864e22a7
warning: redirecting to https://lab.fedeproxy.eu/ceph/ceph.git/
warning: redirecting to https://lab.fedeproxy.eu/ceph/ceph.git/
2021-04-24 18:20:28,833.833 INFO:teuthology.suite.run:ceph branch: wip-mempool-cacheline-49781 864e22a77ccac23454407573c125a17c55138eb7
2021-04-24 18:20:28,846.846 INFO:teuthology.repo_utils:Fetching wip-mempool-cacheline-49781 from origin
2021-04-24 18:20:29,882.882 INFO:teuthology.repo_utils:Resetting repo at /home/dachary/src/lab.fedeproxy.eu_ceph_ceph_wip-mempool-cacheline-49781 to origin/wip-mempool-cacheline-49781
2021-04-24 18:20:30,739.739 INFO:teuthology.suite.run:teuthology branch: master 2713a3cd31b17738a50039eaa9d859b5dc39fb8a
2021-04-24 18:20:30,750.750 INFO:teuthology.suite.run:Suite rados:standalone:workloads:c2c.yaml in /home/dachary/src/lab.fedeproxy.eu_ceph_ceph_wip-mempool-cacheline-49781/qa/suites/rados/standalone/workloads/c2c.yaml generated 1 jobs (not yet filtered)
2021-04-24 18:20:30,760.760 INFO:teuthology.suite.util:container build centos/8, checking for build_complete
Job scheduled with name dachary-2021-04-24_18:20:26-rados:standalone:workloads:c2c.yaml-wip-mempool-cacheline-49781-distro-basic-smithi and ID 6071218
2021-04-24 18:20:31,953.953 INFO:teuthology.suite.run:Scheduling rados:standalone:workloads:c2c.yaml
Job scheduled with name dachary-2021-04-24_18:20:26-rados:standalone:workloads:c2c.yaml-wip-mempool-cacheline-49781-distro-basic-smithi and ID 6071219
2021-04-24 18:20:32,886.886 INFO:teuthology.suite.run:Suite rados:standalone:workloads:c2c.yaml in /home/dachary/src/lab.fedeproxy.eu_ceph_ceph_wip-mempool-cacheline-49781/qa/suites/rados/standalone/workloads/c2c.yaml scheduled 1 jobs.
2021-04-24 18:20:32,886.886 INFO:teuthology.suite.run:0/1 jobs were filtered out.
Job scheduled with name dachary-2021-04-24_18:20:26-rados:standalone:workloads:c2c.yaml-wip-mempool-cacheline-49781-distro-basic-smithi and ID 6071220
2021-04-24 18:20:33,832.832 INFO:teuthology.suite.run:Test results viewable at http://pulpito.front.sepia.ceph.com:80/dachary-2021-04-24_18:20:26-rados:standalone:workloads:c2c.yaml-wip-mempool-cacheline-49781-distro-basic-smithi/
```

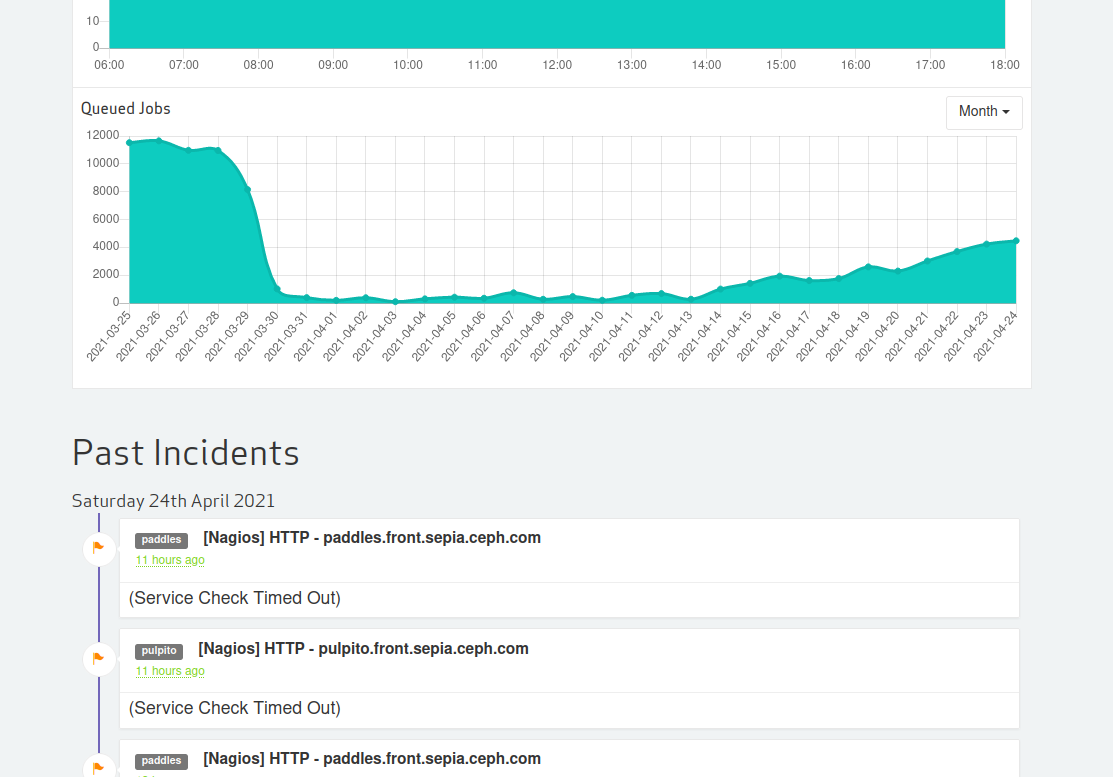

However, it looks like the Sepia lab is experiencing problems: the status page shows timeouts and the queue is growing.

It is probably best to wait a few days: the problem is unlikely to be fixed over the weekend. In the meantime I used a large server from another lab (128 cores, 1.5 TB NVMe, 700+ GB RAM) to get a better understanding of how `perf c2c` works.

To be continued.